Apple’s commitment this year to promote the Shortcuts app, formerly known as Workflow, has spurred excitement among a growing group in the iOS community. Shortcuts lets users automate tasks on iPhone and iPad, much like Automator on the Mac. While the app is accessible to people without any development experience, I’d like to share what it was like to develop my first Shortcut as an iOS developer. I also hope the resulting Shortcut will save Jira users some time. First, here is some background on the process I chose to optimize with Shortcuts.

Nearly everybody involved in creating software needs tools for documenting bugs. One of the most widely used bug trackers is Jira, which I use daily. When I stumble upon a bug on an iOS device, I follow these steps to track it in Jira:

- Capture a screenshot or video of the bug on my iPad/iPhone.

- Create a case in Jira documenting the bug.

- Annotate or trim the aforementioned media.

- Upload and attach the media to the Jira case.

- Delete the media from the iPad/iPhone.

This process seems simple enough in theory but has some minor challenges. The Photos app on my iPhone and iPad is littered with software screenshots, some of them months old, that I neglected to delete after uploading them to Jira. Attaching the media to the case can also be cumbersome, as I need to move it to whichever device has the related Jira case open. The Jira app for iOS can make this simpler; however, I prefer to create cases on my Mac because entering the details is faster there.

My aim was to streamline this with a Shortcut that walks me through uploading my most recent media to my most recent Jira case and then deleting that media from the device. I was excited to find that Shortcuts was up to the task, and I jumped right in.
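Shortcuts are assembled from drag-and-drop actions rather than written as code, but for readers who think in code, here is a rough Python equivalent of the first step: asking Jira for the most recent case. This is a minimal sketch, not the Shortcut itself. It assumes Jira's REST API v2 search endpoint (`/rest/api/2/search`), assumes that "most recent case" means the newest issue I reported, and uses a placeholder base URL and a hypothetical helper name.

```python
from urllib.parse import urlencode

# Placeholder site URL (assumption): replace with your own Jira instance.
JIRA_BASE_URL = "https://your-company.atlassian.net"


def latest_issue_search_url(base_url: str) -> str:
    """Build a Jira REST v2 search URL that returns only the newest issue
    reported by the authenticated user (hypothetical helper)."""
    params = {
        # JQL: issues I reported, newest first.
        "jql": "reporter = currentUser() ORDER BY created DESC",
        # Only the single most recent match is needed.
        "maxResults": 1,
        # Keep the response small by requesting just the summary field.
        "fields": "summary",
    }
    return f"{base_url.rstrip('/')}/rest/api/2/search?{urlencode(params)}"


print(latest_issue_search_url(JIRA_BASE_URL))
```

Fetching this URL with the user's credentials should return a small JSON document whose `issues` list holds at most one entry.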

Shortcuts First Impressions

I’ll admit that I spent no time reading documentation or watching tutorials for Shortcuts before getting started. I think that speaks to the approachability of Shortcuts (and my impatience to play).

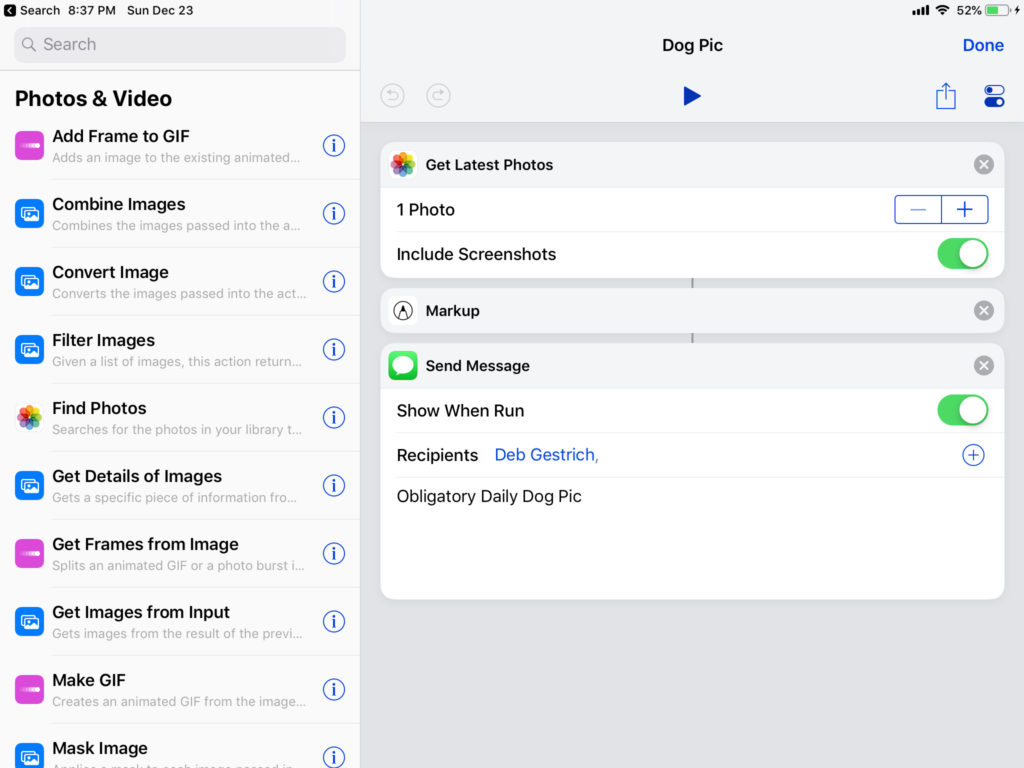

The Shortcuts interface offers many options that require no background in development. Using its drag-and-drop interface, one can compose actions to play a song, send a text message or search the web, for example, without any familiarity with programming languages. But the tool holds much more power that will be familiar to developers, and it makes an excellent primer for those who want to learn more about development.

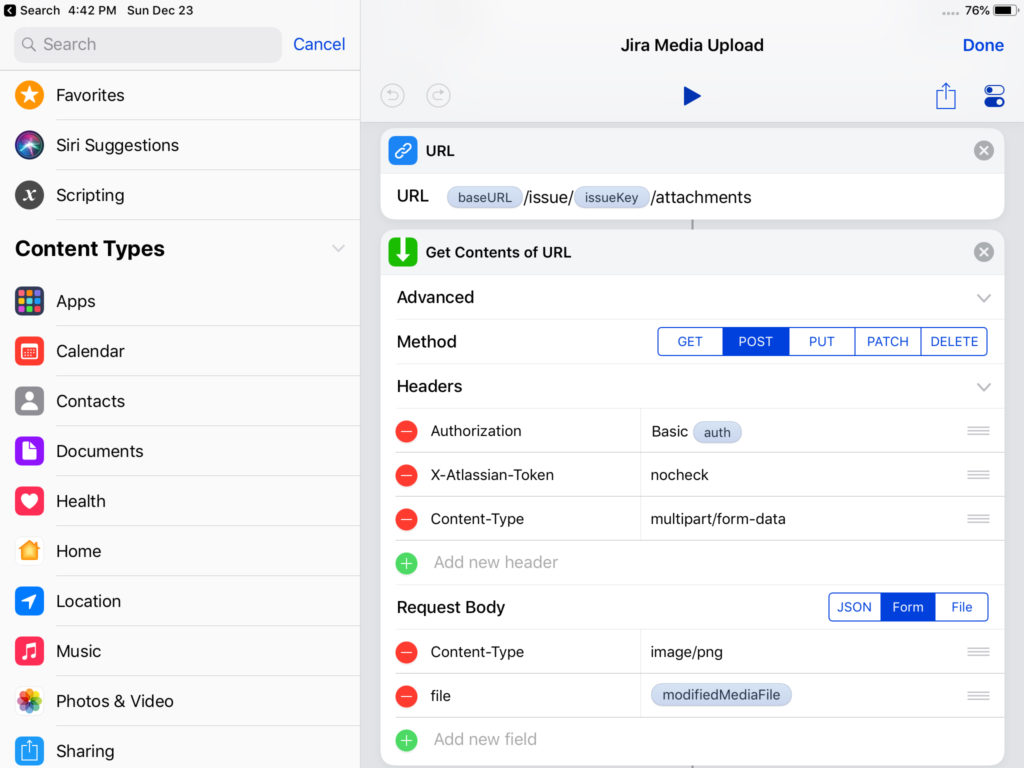

Some of the features familiar to developers are variables, conditional and control constructs, arrays/dictionaries and code comments, to name just a few. There are also powerful APIs to fetch data from the web, parse JSON and even SSH into other devices (and much more). I was delighted to find so much flexibility, so I chose not to limit the scope of this Shortcut at first.
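To make the "fetch data, parse JSON, branch on the result" idea concrete, here is a Python analogue of what dictionary and conditional actions can do with a Jira search response. It is a sketch under assumptions: the response shape (`issues`, `key`, `fields.summary`) follows Jira's REST API v2 search results, and `latest_issue` is a hypothetical name.

```python
import json
from typing import Optional, Tuple


def latest_issue(response_body: str) -> Optional[Tuple[str, str]]:
    """Return (key, summary) of the first issue in a Jira search response,
    or None when the search matched nothing (hypothetical helper)."""
    data = json.loads(response_body)   # JSON text -> dictionary
    issues = data.get("issues", [])    # array of issue dictionaries
    if not issues:                     # conditional: no case to attach to
        return None
    first = issues[0]
    summary = first.get("fields", {}).get("summary", "")
    return first["key"], summary


sample = '{"total": 1, "issues": [{"key": "APP-42", "fields": {"summary": "Crash on launch"}}]}'
print(latest_issue(sample))  # ('APP-42', 'Crash on launch')
```

Returning the summary alongside the key makes it easy to ask the user to confirm the case before anything is uploaded.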

Lessons Learned

Like many complex apps, Shortcuts is more efficient to develop on an iPad than on an iPhone. The larger form factor and persistent sidebar make it much easier to navigate the interface and add actions. An external keyboard helps as well, and I’m curious whether the Apple Pencil would improve drag-and-drop.

That said, the iPhone makes it very convenient to develop Shortcuts during small idle periods when your iPad may not be on hand (in an Uber, waiting in line at the bank, etc.). This was a new experience for me, and I got hooked on it the way many people get hooked on mobile games. I have mixed feelings about how distracting that can be, but developing in spare moments was an interesting change.

This Jira Shortcut morphed into a more complex tool than I expected. It makes several API calls to Jira, processes the results, requests input from the user, shows error messages and more. As a developer, my inclination was to break these components into smaller Shortcuts for modularity and usability. That turned out to be a wrong turn once I decided to share the Shortcut, since a user would have had to download multiple Shortcuts for a single feature. So as I neared completion, I merged three separate Shortcuts into one.
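The central API call is attaching the screenshot to the case. As a hedged sketch in Python, assuming Jira's REST API v2 attachment endpoint (`POST /rest/api/2/issue/{key}/attachments`, which expects a multipart form field named `file` and an `X-Atlassian-Token: no-check` header), the request and a user-facing error message might look like this. `build_attachment_request` and `upload` are hypothetical names.

```python
import urllib.error
import urllib.request
import uuid


def build_attachment_request(base_url: str, issue_key: str, filename: str,
                             file_bytes: bytes, auth_header: str,
                             content_type: str = "image/png") -> urllib.request.Request:
    """Build (but do not send) a multipart POST attaching one file to a Jira issue.
    Assumes `filename` contains no double quotes."""
    boundary = uuid.uuid4().hex  # random multipart boundary
    body = b"".join([
        f"--{boundary}\r\n".encode(),
        # Jira expects the file in a form field named "file".
        f'Content-Disposition: form-data; name="file"; filename="{filename}"\r\n'.encode(),
        f"Content-Type: {content_type}\r\n\r\n".encode(),
        file_bytes,
        f"\r\n--{boundary}--\r\n".encode(),
    ])
    headers = {
        "Authorization": auth_header,
        # Without this XSRF opt-out header Jira blocks multipart uploads.
        "X-Atlassian-Token": "no-check",
        "Content-Type": f"multipart/form-data; boundary={boundary}",
    }
    url = f"{base_url.rstrip('/')}/rest/api/2/issue/{issue_key}/attachments"
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


def upload(request: urllib.request.Request) -> str:
    """Send the request and turn the outcome into a message for the user."""
    try:
        with urllib.request.urlopen(request) as response:
            return f"Attached to Jira (HTTP {response.status})"
    except urllib.error.HTTPError as err:  # must precede URLError (subclass)
        return f"Jira rejected the upload: HTTP {err.code} {err.reason}"
    except urllib.error.URLError as err:
        return f"Could not reach Jira: {err.reason}"
```

Hand-building the multipart body keeps the sketch to the standard library; a third-party HTTP client such as `requests` could assemble it instead. The `upload` helper turns failures into a readable message, in the spirit of the error messages the Shortcut shows.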

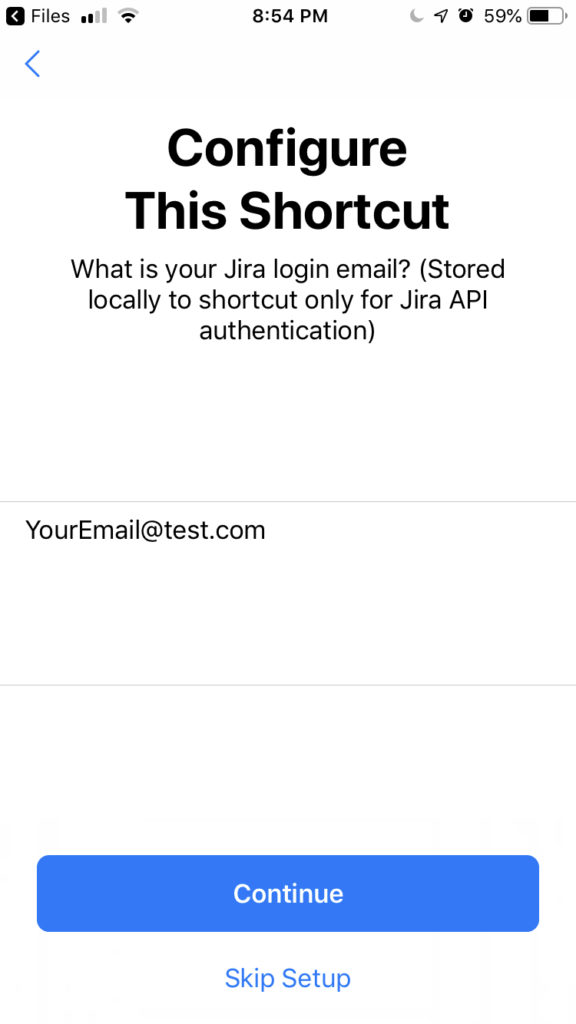

Security also required some thought on this project. The Shortcut needs access to the user’s Jira credentials (username plus password or token). All the actions and parameters of a Shortcut are stored in the Shortcuts app’s sandbox. While storing usernames and passwords there is not ideal, the primary risk I see is a user accidentally sharing those credentials if they ever redistribute their Shortcut (“Hello World... oops, here is my login!”). I attempted to work around this with import questions, which request sensitive information when the Shortcut is installed and should not include those answers if the Shortcut is later shared. I have not verified this behavior, so I caution against sharing any Shortcut that has captured personal information.
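Outside of Shortcuts, a common way to keep credentials out of anything you might share is to read them at run time. A minimal Python sketch, assuming Jira accepts HTTP Basic authentication with a username (or email) plus token, and assuming hypothetical environment variables `JIRA_EMAIL` and `JIRA_API_TOKEN`:

```python
import base64
import os
from typing import Mapping


def basic_auth_header(username: str, token: str) -> str:
    """Encode 'username:token' as an HTTP Basic Authorization header value."""
    raw = f"{username}:{token}".encode("utf-8")
    return "Basic " + base64.b64encode(raw).decode("ascii")


def auth_header_from_env(env: Mapping[str, str] = os.environ) -> str:
    """Read credentials at run time (hypothetical variable names) so they are
    never written into a script or Shortcut that might be shared."""
    try:
        return basic_auth_header(env["JIRA_EMAIL"], env["JIRA_API_TOKEN"])
    except KeyError as missing:
        raise RuntimeError(f"Please set {missing.args[0]} before running") from None
```

This plays roughly the same role as import questions: each user supplies the secret, so it never travels with the shared artifact.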

Conclusion

Nearing the end of this project, I realized there is a limit to how much functionality you can pack into a Shortcut before it makes more sense to write an iOS app instead. Editing a Shortcut can be tricky because actions can’t be copied and pasted, and the lack of functions makes it difficult to reuse logic. I also missed simple, common language features such as “else if” and “break”/“continue”. Finally, none of the work that goes into a Shortcut can be ported outside of Shortcuts (e.g., to another iOS app or to the Mac).

But Shortcuts is not designed to replace traditional software development or apps. It is, however, an excellent tool for automating tasks on iOS, even for iOS developers. I’d certainly use Shortcuts again when the task to automate is of the appropriate complexity; if it grows too complicated, I’ll consider writing an iOS app. I also hope non-developers can use Shortcuts as an introduction to software development in a familiar environment.

If you are a Jira user and want to try this Shortcut with your own account, you can download it from my GitHub. I welcome feedback and pull requests.